Streamlining Quality Assurance with Automated Testing for Faster Results

A leading sales and marketing tech organization partnered with Applied AI Consulting to enhance the quality assurance of their campaign analytics application. The analytics application provides users with comprehensive insights into campaign performance. Its core functionality revolves around generating reports based on selected instructions, enabling users to gain valuable data-driven insights to optimize their campaigns.

The application is organized into several key modules, each playing a critical role in delivering a complete analytics experience.

Dashboard:

Displays campaign data visually through charts and graphs, offering an overview of campaign performance. It also provides key information on campaign assets and leads.

Campaigns:

Lists all current and past campaigns, showing important metrics like assets, leads, and total campaign value. This module helps users track and analyze the performance of individual campaigns.

Report Module:

Allows users to generate reports based on campaign data by selecting specific FAQs. These reports offer detailed insights into campaign outcomes, customized to the user’s needs.

Templates:

Provides pre-built templates that users can apply to generate reports across different campaigns, saving time and ensuring consistency in report creation.

FAQs (Frequently Asked Questions):

For each FAQ, report generation instructions are crafted using ChatGPT prompts. Based on these predefined instructions, the system generates corresponding reports. When a user selects an option from the FAQ list, the system utilizes the instruction linked to that selection to generate the report automatically.
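The FAQ-to-instruction lookup described above can be pictured as a simple keyed map. The following is a minimal sketch only: the class name `FaqInstructions`, the method `instructionFor`, and both sample FAQ entries are hypothetical, since the source does not list the real FAQ texts or prompts, and the step that sends the instruction to ChatGPT is left out.

```java
import java.util.Map;
import java.util.Optional;

/** Hypothetical lookup from a selected FAQ to its predefined report instruction. */
public class FaqInstructions {

    // Illustrative entries only; the real FAQs and prompts are not given in the case study.
    private static final Map<String, String> INSTRUCTION_BY_FAQ = Map.of(
        "Which campaigns generated the most leads?",
        "Summarize leads per campaign and rank campaigns from highest to lowest.",
        "How did campaign assets perform?",
        "List each asset with its engagement metrics and highlight the top performers."
    );

    /** Returns the instruction linked to the selected FAQ, or empty if none is defined. */
    public static Optional<String> instructionFor(String selectedFaq) {
        if (selectedFaq == null) {
            return Optional.empty(); // Map.of(...) maps reject null keys on lookup
        }
        return Optional.ofNullable(INSTRUCTION_BY_FAQ.get(selectedFaq));
    }

    public static void main(String[] args) {
        String faq = "Which campaigns generated the most leads?";
        // The returned instruction would then be passed on to the report generator.
        System.out.println(instructionFor(faq).orElse("No instruction defined for this FAQ"));
    }
}
```

Returning an `Optional` makes the "FAQ without an instruction" case explicit, which is also a natural condition for an automated test to cover.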

User Management:

This module enables administrators to manage user accounts, assign roles, and define access levels, ensuring secure and efficient use of the platform.

This modular structure offers flexibility and scalability, allowing users to quickly generate customized reports and gain actionable insights from their campaign data.

Dependency on Manual Testing

Manual testing was the primary method for testing new features, causing delays and limiting scalability as it consumed significant time and resources for each release.

Incomplete Automation Framework

Although an external expert had initiated the automation framework (Cucumber Selenium with Java), it required further completion and alignment with the development team to be fully functional.

Framework Instability & Resource Strain

Frequent failures in the automation framework during daily pipeline executions led to unreliability, forcing the QA team to rely on manual testing for around 40-50% of the process, especially as new features were continuously added.

Coordination Challenges

The team struggled to synchronize manual and automation testing efforts with developers, particularly due to the division of responsibilities between the two testing methods.

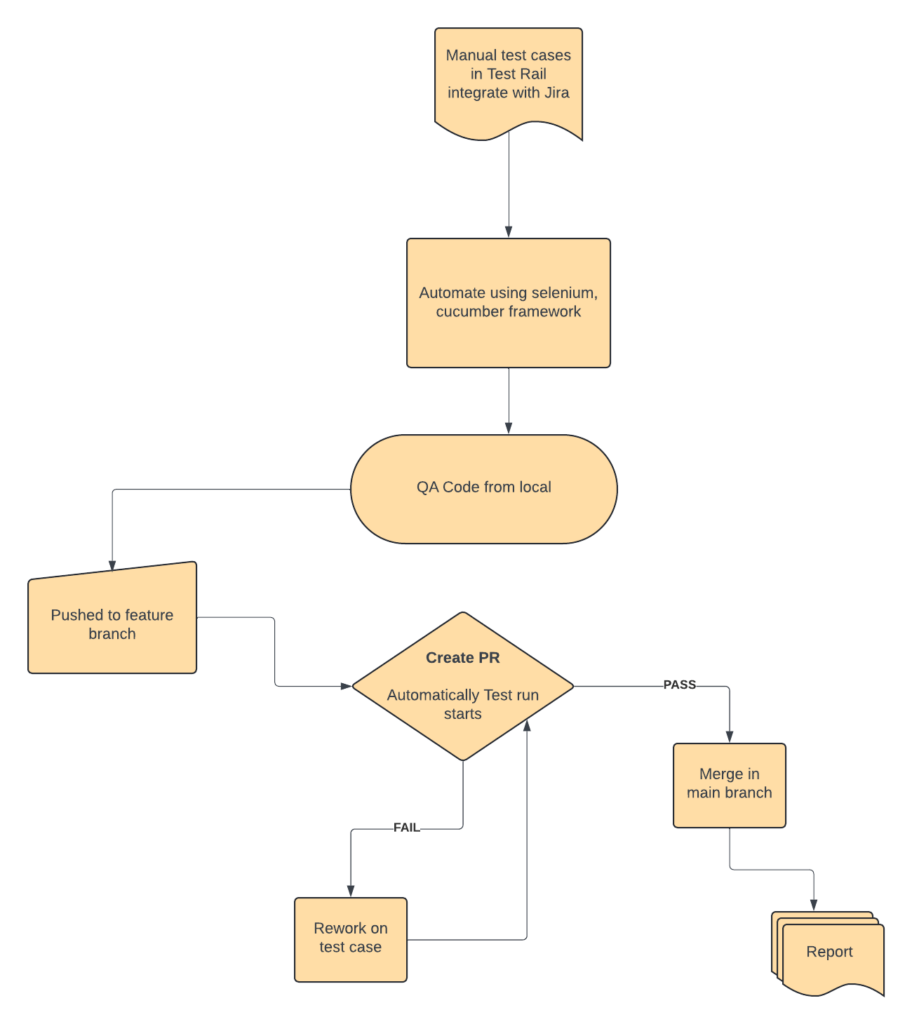

The team completed and stabilized the Cucumber Selenium framework (Java-based) that had been left incomplete by an external expert. By addressing failures, they ensured reliable automation execution, significantly reducing manual intervention.

Three key test packs were automated:

* Sanity Test Pack (25 test cases across all environments)

* Regression Test Pack (100+ daily tests via Jenkins)

* Post-Deployment Sanity Checks to monitor application health after each deployment

Automation was expanded across Develop, Staging, and Production environments, ensuring thorough sanity and regression testing. This reduced manual testing efforts by 40-50%, allowing the team to focus on testing new features and improving overall efficiency.
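One common way to organize packs like these in a Cucumber project is to tag scenarios and select a pack with a tag expression at run time. The sketch below assumes that approach; the case study does not say how the packs were actually wired up. The tag names (`@sanity`, `@regression`, `@post_deploy_sanity`), the `Trigger` enum, and the choice of which packs run on which trigger are all illustrative assumptions.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical mapping from a pipeline trigger to a Cucumber tag expression. */
public class TestPackSelector {

    /** What started the run (assumed triggers, not taken from the source). */
    public enum Trigger { DAILY_PIPELINE, POST_DEPLOYMENT }

    /** The three packs named in the case study, with assumed tag names. */
    public enum TestPack {
        SANITY("@sanity"),
        REGRESSION("@regression"),
        POST_DEPLOYMENT_SANITY("@post_deploy_sanity");

        final String tag;

        TestPack(String tag) {
            this.tag = tag;
        }
    }

    /** Packs to run for a trigger; this split is an assumption for illustration. */
    public static List<TestPack> packsFor(Trigger trigger) {
        switch (trigger) {
            case DAILY_PIPELINE:
                return List.of(TestPack.SANITY, TestPack.REGRESSION);
            case POST_DEPLOYMENT:
                return List.of(TestPack.POST_DEPLOYMENT_SANITY);
            default:
                throw new IllegalArgumentException("Unknown trigger: " + trigger);
        }
    }

    /** Joins the pack tags with "or", the Cucumber tag-expression operator. */
    public static String tagExpression(Trigger trigger) {
        return packsFor(trigger).stream()
                .map(pack -> pack.tag)
                .collect(Collectors.joining(" or "));
    }

    public static void main(String[] args) {
        // Prints: @sanity or @regression
        System.out.println(tagExpression(Trigger.DAILY_PIPELINE));
    }
}
```

A Jenkins job could pass the resulting string to the test run, for example through the `cucumber.filter.tags` property, so that one codebase serves all three packs.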

The QA team successfully stabilized the Cucumber Selenium framework, integrating it into the deployment process. This resulted in reliable daily test executions, with failure rates dropping to 5-10%, and ensured no major bugs reached production.

Introducing structured test packs—Sanity, Regression, and Post-Deployment Sanity Checks—optimized the testing process, saving 70% of the time previously spent on manual testing. Automation provided accurate and timely reports, enhancing QA efficiency.

Automation saved approximately 70% of the time previously spent on manual testing and cut QA costs by 50-60%, particularly during the development phase. This efficiency accelerated production releases and lowered overall expenses.

By minimizing human errors, automation enhanced testing accuracy by 70%, leading to more consistent outcomes and higher-quality production deployments. This improvement ensured that the software met stringent quality standards with every release.

Daily automated test runs and post-deployment sanity checks ensured ongoing application stability. This approach enabled regular updates without jeopardizing core functionality, while Jenkins pipelines and Slack notifications alerted the team to failures quickly so that issues could be resolved promptly.
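As an illustration of the notification step, the sketch below builds the JSON body that a Slack incoming webhook accepts (an object with a `text` field). It is a sketch under assumptions: the case study does not say whether notifications were sent through a webhook or a Jenkins plugin, and the class name, method names, job name, and message wording are hypothetical. The actual HTTP POST is omitted.

```java
/** Hypothetical builder for a Slack incoming-webhook message about a test run. */
public class SlackAlert {

    /** Minimal JSON string escaping; covers only quotes, backslashes, and newlines. */
    static String escapeJson(String value) {
        StringBuilder escaped = new StringBuilder();
        for (char c : value.toCharArray()) {
            switch (c) {
                case '"':
                    escaped.append("\\\"");
                    break;
                case '\\':
                    escaped.append("\\\\");
                    break;
                case '\n':
                    escaped.append("\\n");
                    break;
                default:
                    escaped.append(c);
            }
        }
        return escaped.toString();
    }

    /** Builds the {"text": "..."} body for a failed run; the wording is illustrative. */
    public static String failurePayload(String job, String environment, int failed, int total) {
        String text = String.format("%s on %s: %d of %d tests failed", job, environment, failed, total);
        return "{\"text\":\"" + escapeJson(text) + "\"}";
    }

    public static void main(String[] args) {
        // Prints: {"text":"regression-daily on staging: 3 of 100 tests failed"}
        System.out.println(failurePayload("regression-daily", "staging", 3, 100));
    }
}
```

In a real pipeline a JSON library would be a safer choice than hand-written escaping; the manual version is used here only to keep the example dependency-free.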

Continuous Expansion of Automation Coverage

As new features and functionalities are introduced, the automation framework can be continuously expanded. By regularly integrating new test cases into the existing test packs (e.g., sanity and regression), the framework will be able to handle future product updates efficiently, further reducing manual testing efforts.

Cross-Environment Automation

The current setup, which supports automation across Develop, Staging, and Production environments, is scalable to additional environments if needed. Automation can also be extended to handle complex integration tests, performance tests, and end-to-end scenarios.

Advanced Reporting and Analytics

Integrating advanced analytics into the testing pipeline will provide deeper insights into trends, failure patterns, and performance, helping to predict issues earlier in the development cycle. This can lead to smarter decisions for future releases and improved test case management.
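A simple starting point for this kind of analysis is to compute per-test failure rates from run history and flag tests whose results are inconsistent. The sketch below assumes the history is available as a map from test name to pass/fail results per run; that data shape, the class name `FailurePatterns`, and the definition of "flaky" as "both passed and failed in the recorded runs" are assumptions for illustration, not details from the project.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical failure-pattern helpers over recorded test runs. */
public class FailurePatterns {

    /** Fraction of runs that failed, from 0.0 to 1.0; 0.0 when there is no history. */
    public static double failureRate(List<Boolean> passedPerRun) {
        if (passedPerRun.isEmpty()) {
            return 0.0;
        }
        long failures = passedPerRun.stream().filter(passed -> !passed).count();
        return (double) failures / passedPerRun.size();
    }

    /** Names of tests that both passed and failed in the history, sorted by name. */
    public static List<String> flakyTests(Map<String, List<Boolean>> history) {
        List<String> flaky = new ArrayList<>();
        // TreeMap gives a stable, alphabetical iteration order for reporting.
        for (Map.Entry<String, List<Boolean>> entry : new TreeMap<>(history).entrySet()) {
            boolean sawPass = entry.getValue().contains(true);
            boolean sawFail = entry.getValue().contains(false);
            if (sawPass && sawFail) {
                flaky.add(entry.getKey());
            }
        }
        return flaky;
    }

    public static void main(String[] args) {
        // Sample data invented for the example.
        Map<String, List<Boolean>> history = Map.of(
            "login", List.of(true, false, true),
            "generateReport", List.of(true, true, true)
        );
        System.out.println("Flaky tests: " + flakyTests(history));
        System.out.println("login failure rate: " + failureRate(history.get("login")));
    }
}
```

Tests flagged this way are candidates for stabilization before they erode trust in the daily pipeline results.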

CI/CD Pipeline Optimization

As the CI/CD process is further streamlined with tools like Jenkins, there is room to optimize the pipeline with more frequent releases, quicker feedback loops, and integration of newer DevOps tools to enhance automation efficiency.

The shift towards automation in the project has greatly improved the quality assurance process, reducing both time and cost while delivering more reliable, accurate test results. Automation helped resolve 70-80% of the issues and stabilized the testing framework, contributing to faster production releases with minimal failures. The introduction of structured test packs for sanity, regression, and post-deployment checks ensured consistent validation across all environments.

With the successful integration of automation, the project is well-positioned to scale its testing process as new features are introduced, ultimately providing ongoing business value through time savings, cost reductions, and improved accuracy. Continuous improvements in the automation framework and CI/CD pipeline will further enhance its capability to support rapid and reliable software deployments in the future.

— Head of QA Engineer
